Cancer patients’ medical records can contain up to 100 gigabytes of heterogeneous data, such as blood and tumor values, personal indicators, sequencing and therapy data, and more. Because suitable processing techniques are lacking, data sets of this size are difficult to manage efficiently.

As a result, the potential of a personalized treatment approach for each cancer patient remains essentially theoretical; the vast majority of cancer patients continue to undergo standard therapies. The German Cancer Research Center (DKFZ) now wants to change this with the help of quantum computing.

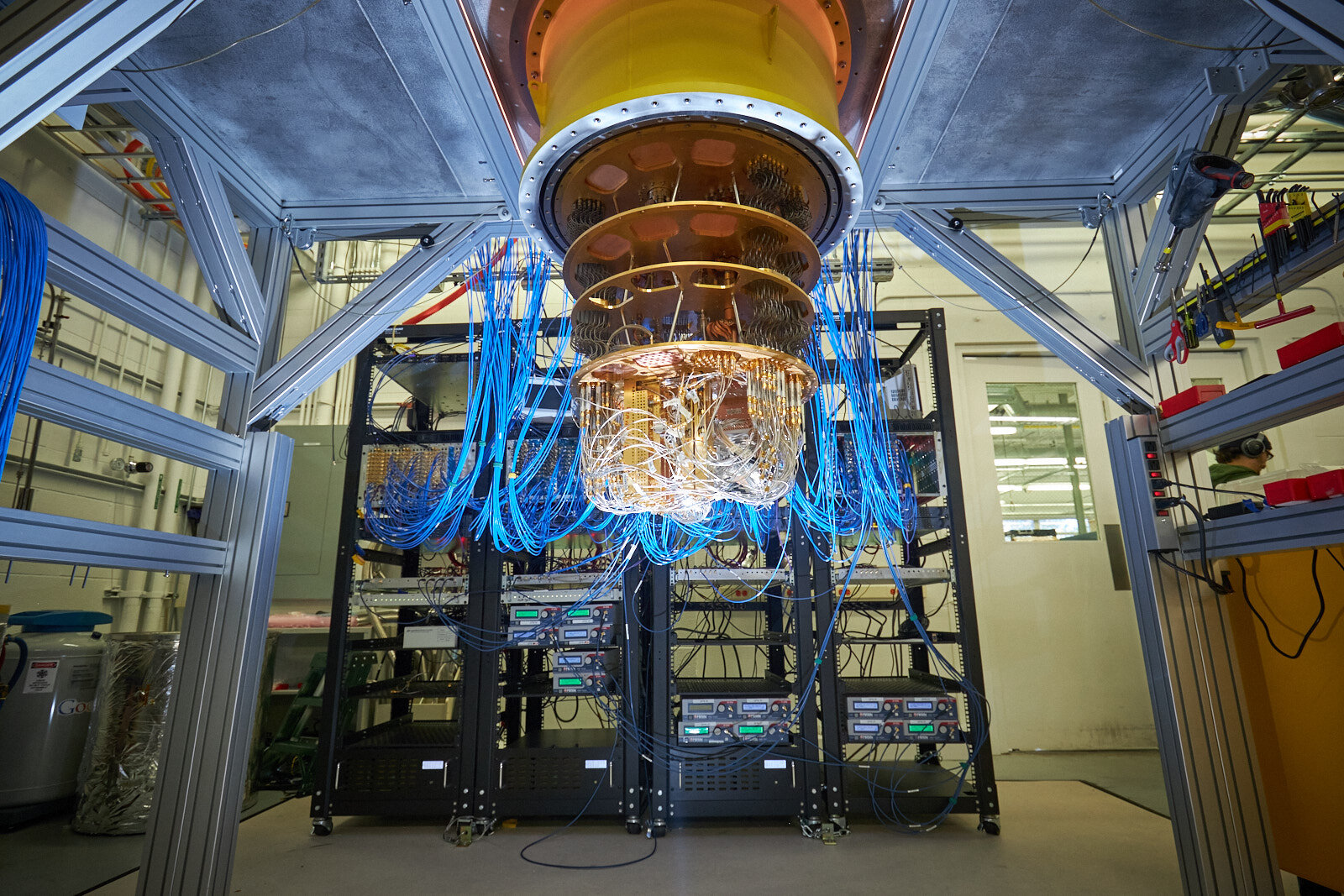

Previously, the DKFZ team had done some work with other available devices that emulated qubits. When working on an actual quantum computer, such as the IBM Q System One, there is a significant difference.

The quantum computer, according to Dr. Halama, allows you to understand how stable and intricate things are, as well as what may be done to prevent mistakes.

The researchers want to take an application-oriented approach to build on these benefits and develop new concepts. The goal is to figure out not just which algorithms are best for processing the data in question, but also how error correction might be improved.

Quantum Computing Could Help With Data Protection, Speed and Flexibility

Data security, speed, and adaptability are three important factors when dealing with a quantum computer. Scientists are now working on test data.

However, when real patient data is used in the future, it will be a major advantage that the data on the quantum computer is subject to German data protection regulations and remains on site.

When it comes to cancer patients, quantum computing’s improved computation speed (compared with traditional computing) is critical for speedy decision making.

Because quantum processors can process data in parallel rather than sequentially, they offer the potential to analyze large amounts of data in a fraction of the time required by traditional computers.